Getting ChatGPT to turn a flat vocabulary list into a hierarchical taxonomy

ChatGPT-3, Chat GPT-4.

I was catching up with my old friend Paul Prescod the other day. We have not only known each other since the early days of XML, but actually before that: “since XML was a four-letter word”, to quote Paul.

One current popular topic we discussed is where LLM tools such as ChatGPT can add value in the data pipelines that we have worked with. We’ve all seen blog posts where people got ChatGPT to create code in their favorite languages; Paul and I, as always, were focused on how it could improve content and content metadata. I’ve often said that the point of metadata is to add value to content, so automating the creation of useful metadata is automating the addition of value to content.

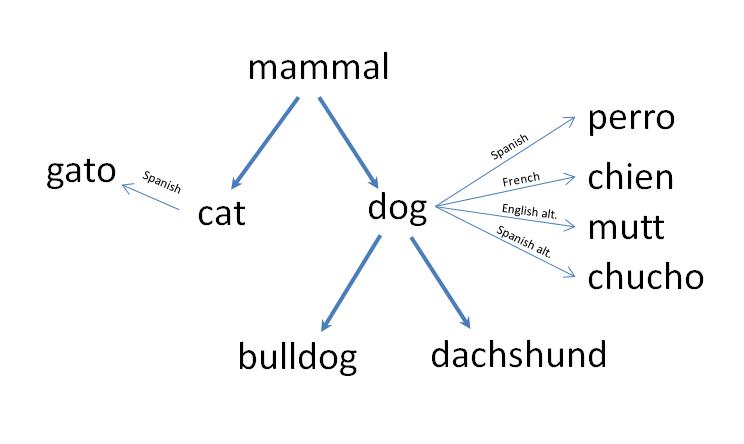

Automating the assignment of keyword terms from a controlled vocabulary to content, in order to improve content findability, has been a classic goal for decades. While talking to Paul, I wondered whether the controlled vocabulary itself could be improved by ChatGPT, specifically by turning flat lists into hierarchies.

How does this add value? Imagine that Sidney at the hypothetical Snee Company stores a picture of Lassie tagged as “Collie” in a CMS, and that term in the CMS’s taxonomy has a link to the broader term “Dog”. Taylor, another Snee employee, is writing an article about hints for taking your pets on vacation and searches the CMS for dog pictures. Sidney didn’t tag the Lassie picture as “Dog”, but the taxonomy-aware search engine knows that it shows a collie, which therefore makes it a dog, and returns that picture to Taylor. Taylor found a good picture for the article and has benefited from the value added by this piece of metadata.

I thought I’d create a controlled vocabulary of animal species and broader terms as a simple flat list and see how well ChatGPT-3 could impose some hierarchical structure on this by adding links such as the Collie-to-Dog one described above. Of course, I would have it use the RDF-based standard SKOS standard for controlled vocabularies, taxonomies, and thesauri.

A web search showed that Kurt Cagle, another old friend from XML’s early days, had given me a nice head start in his recent posting Nine ChatGPT Tricks for Knowledge Graph Workers. His list of animals (see “Example 8. Taxonomy Construction” in that article) sorted and indented the terms to show their hierarchy. I wanted to ChatGPT to do that work, so I made a copy of Kurt’s list, removed all the leading spaces, and sorted the lines into a random order. (Cool Linux command line tool I found for that: shuf.)

I then wrote a one-off Perl script that converted the list to SKOS Turtle RDF. All it said was that these were concepts with these labels. Here are the first 15 lines; the remainder follows the pattern shown:

@prefix skos: <http://www.w3.org/2004/02/skos/core#> .

@prefix d: <http://learningsparql.com/ns/data#> .

d:c1 a skos:Concept ;

skos:prefLabel "Tigers" .

d:c2 a skos:Concept ;

skos:prefLabel "Bears" .

d:c3 a skos:Concept ;

skos:prefLabel "Mammals" .

d:c4 a skos:Concept ;

skos:prefLabel "Primates" .

I wanted to see if ChatGPT could add triples such as d:c4 skos:broader d:c3. To summarize my result, the free ChatGPT-3 did OK, but not great; when Paul later tried it with ChatGPT-4, for which he is paying for a subscription, that did much better. Important ChatGPT lesson here: you get what you pay for.

Here is the prompt that I gave to ChatGPT-3:

Take the following set of Turtle RDF triples and add more triples that use skos:broader as their predicate. The new triples should show the hierarchical relationship of the existing terms.

Below that prompt I pasted the data that is excerpted above; you can see the whole thing at https://bobdc.com/miscfiles/simpleSKOS.ttl.

The system responded with my original RDF triples and the new ones that it generated based on what I asked for. The prefix declarations at the top were missing their angle brackets, so I added those to make it parse properly. I then wrote the following SPARQL query to process the returned RDF and show me a report that was more intuitive to read than statements like d:c4 skos:broader d:c3:

prefix skos: <http://www.w3.org/2004/02/skos/core#>

prefix d: <http://learningsparql.com/ns/data#>

SELECT ?narrowerLabel ?broaderLabel WHERE {

?narrower skos:prefLabel ?narrowerLabel ;

skos:broader ?broader .

?broader skos:prefLabel ?broaderLabel .

}

Here is the resulting report:

----------------------------------

| narrowerLabel | broaderLabel |

==================================

| "Mammals" | "Bears" |

| "Mammals" | "Tigers" |

| "Coyotes" | "Canines" |

| "Felines" | "Animals" |

| "Vertebrates" | "Animals" |

| "Ursines" | "Bears" |

| "Wolves" | "Canines" |

| "Animals" | "Vertebrates" |

| "Primates" | "Mammals" |

| "Chimpanzees" | "Primates" |

| "Canines" | "Mammals" |

| "Lions" | "Bears" |

| "Apes" | "Primates" |

| "Carnivores" | "Animals" |

| "Insectivores" | "Carnivores" |

| "Badgers" | "Carnivores" |

| "Panthers" | "Felines" |

| "Humans" | "Felines" |

----------------------------------

A few are completely wrong but it usually made sensible connections. As you can see, many of the connections are backward, like the first two.

Paul had much better luck doing the exact same thing with ChatGPT-4. He also did a lot of prompt refinement to get the system to explain why it did what it did. He has promised me that he will be writing that up soon, and when he does I will link to his writeup from here. It’s an interesting start to an answer for one of the important questions of 2023: “What useful work can I get Large Language Models to do for me?”

Comments? Reply to my tweet (or even better, my Mastodon message) announcing this blog entry.

Share this post